ROracle vs RJDBC

Tuesday, May 10, 2011 at 12:09PM

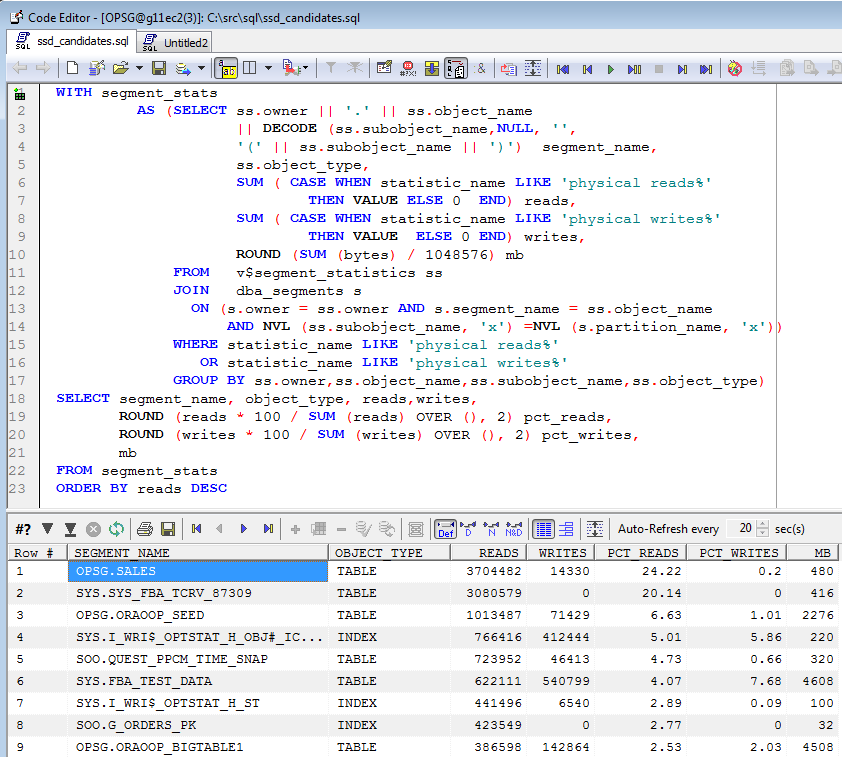

In an earlier post I looked at hooking up the R statistical system to Oracle, either to analyse database performance data or to analyse other data that happens to be stored in Oracle. A reader asked me if I'd compared the performance of RJDBC, which I used in that post, with the ROracle package. I hadn't, but now I have, and I found some pretty significant performance differences.

RJDBC hooks up pretty much any JDBC datasource into R, while ROracle uses native libraries to mediate the connection. RJDBC is probably easier to start with, but ROracle can be installed without much trouble, provided you create some client library entries. So for me, after downloading the ROracle package, my install looked something like this (run it as root):

cd $ORACLE_HOME/bin

genclntsh

genclntst

R CMD INSTALL --configure-args='--enable-static' /root/Desktop/ROracle_0.5-9.tar.gz
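Once both packages are installed, connecting from R looks broadly similar with either one. The following is a minimal sketch rather than the exact code used for these tests: the jar path, host, service name and credentials are all placeholders, and the `dbConnect` argument names for ROracle follow current releases, so the 0.5-9 version may differ.

```r
library(DBI)

# --- RJDBC: needs a Java runtime plus the Oracle JDBC driver jar ---
library(RJDBC)
jdbc_drv <- JDBC(
  driverClass = "oracle.jdbc.OracleDriver",
  classPath   = "/path/to/ojdbc6.jar"      # placeholder location of the driver jar
)
jdbc_con <- dbConnect(
  jdbc_drv,
  "jdbc:oracle:thin:@//dbhost:1521/orcl",  # placeholder host, port and service
  "scott", "tiger"                         # placeholder credentials
)

# --- ROracle: uses the native Oracle client libraries ---
library(ROracle)
ora_drv <- dbDriver("Oracle")
ora_con <- dbConnect(ora_drv,
                     username = "scott",   # placeholder credentials
                     password = "tiger",
                     dbname   = "orcl")    # placeholder TNS alias

# The same DBI call works against either connection.
print(dbGetQuery(jdbc_con, "SELECT COUNT(*) FROM all_objects"))
print(dbGetQuery(ora_con,  "SELECT COUNT(*) FROM all_objects"))

dbDisconnect(jdbc_con)
dbDisconnect(ora_con)
```

Because both packages implement the DBI interface, the rest of an analysis script can usually stay the same whichever driver is chosen.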

It was pretty obvious right away that RJDBC was much slower than ROracle. Here’s a plot of elapsed times for variously sized datasets:

The performance of RJDBC degrades fairly rapidly as the size of the data being retrieved from Oracle increases, and would probably be untenable for very large data sets.
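A comparison like this can be reproduced with a small timing harness built on `system.time`. The sketch below is an illustration, not the script behind the plot: `time_fetches`, `fetch_fake` and `fetch_oracle` are hypothetical names, and the stand-in fetch function exists only so the harness can be run without a database.

```r
# Time how long fetch_fn(n) takes for each row count in `sizes`.
# fetch_fn is any function that takes a row count and returns a data frame.
time_fetches <- function(fetch_fn, sizes) {
  elapsed <- vapply(
    sizes,
    function(n) unname(system.time(fetch_fn(n))["elapsed"]),
    numeric(1)
  )
  data.frame(rows = sizes, elapsed_sec = elapsed)
}

# Hypothetical wrapper for a live connection. It assumes `con` is an open
# RJDBC or ROracle connection and that all_objects is readable:
# fetch_oracle <- function(n) {
#   dbGetQuery(con, sprintf("SELECT * FROM all_objects WHERE rownum <= %d", n))
# }

# Stand-in so the harness can be tried without a database.
fetch_fake <- function(n) data.frame(id = seq_len(n), value = rnorm(n))

timings <- time_fetches(fetch_fake, c(100, 1000, 10000))
print(timings)
```

Running the same harness once with an RJDBC connection and once with an ROracle connection gives two data frames that can be plotted against each other.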

The RJDBC committers do note that RJDBC performance will not be optimal:

The current implementation of RJDBC is done entirely in R, no Java code is used. This means that it may not be extremely efficient and could be potentially sped up by using Java native code. However, it was sufficient for most tasks we tested. If you have performance issues with RJDBC, please let us know and tell us more details about your test case.

The default array size used by RJDBC is only 10, while the default for ROracle is 500. Could this be the explanation for the difference in performance?

You can’t change the default RJDBC fetch size (at least, I couldn’t work out how to), but you can change ROracle's. Here’s a breakdown of elapsed time for RJDBC and ROracle using defaults, and for ROracle using a fetch size of 10:

As you can see, the issue does not seem to be the array size alone. I suspect that the major inefficiency in RJDBC is the overhead of building up the data frame from the result set. Increasing the array fetch size might reduce the impact of this, but the array fetch size alone is not the cause of the slow performance.
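For anyone wanting to repeat the fetch-size experiment, here is a rough sketch of timing ROracle at its default fetch size and at a fetch size of 10. It assumes a current ROracle release, where `dbSendQuery` accepts a `bulk_read` argument for the number of rows fetched per round trip; the 0.5-9 version tested here may expose this setting differently. The connection details and query are placeholders.

```r
library(ROracle)

drv <- dbDriver("Oracle")
con <- dbConnect(drv,
                 username = "scott",   # placeholder credentials
                 password = "tiger",
                 dbname   = "orcl")    # placeholder TNS alias

sql <- "SELECT * FROM all_objects WHERE rownum <= 10000"

# Baseline: the driver's default fetch size.
t_default <- system.time(df_default <- dbGetQuery(con, sql))["elapsed"]

# Same query, fetching only 10 rows per round trip.
t_small <- system.time({
  res <- dbSendQuery(con, sql, bulk_read = 10L)
  df_small <- fetch(res, n = -1)   # n = -1 retrieves all remaining rows
  dbClearResult(res)
})["elapsed"]

print(c(default = unname(t_default), fetch_10 = unname(t_small)))

dbDisconnect(con)
```

If ROracle with a fetch size of 10 is still much faster than RJDBC at its default of 10, the gap has to come from somewhere other than the number of round trips.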

Conclusion

The current implementation of RJDBC is easy to install and fairly portable, but doesn’t provide good performance when loading large data sets. For now, ROracle is the better choice.

Scripts: