Moving to medium

Monday, February 5, 2018 at 9:22AM

Monday, February 5, 2018 at 9:22AM Squarespace has been a great platform, but it seems to me that medium.com has really created a network effect for blogging that overwhelms all the downsides of having to write within someone else's platform. So going forward I'll be blogging at medium at https://medium.com/@guy.harrison.

I've been running this blog on squarespace for about 7 years and for a few years before that on typepad. If you check out http://guyharrison.typepad.com/ you can see how young and beardless I was in those days! :-)

All my older material will remain on Squarespace, though where there is an updated medium article I will link to it. Mostly my new blog content is around blockchain and MongoDB technologies (at least for now). Oracle and MySQL stuff will remain here. It was next to impossible to migrate Squarespace in bulk to medium.

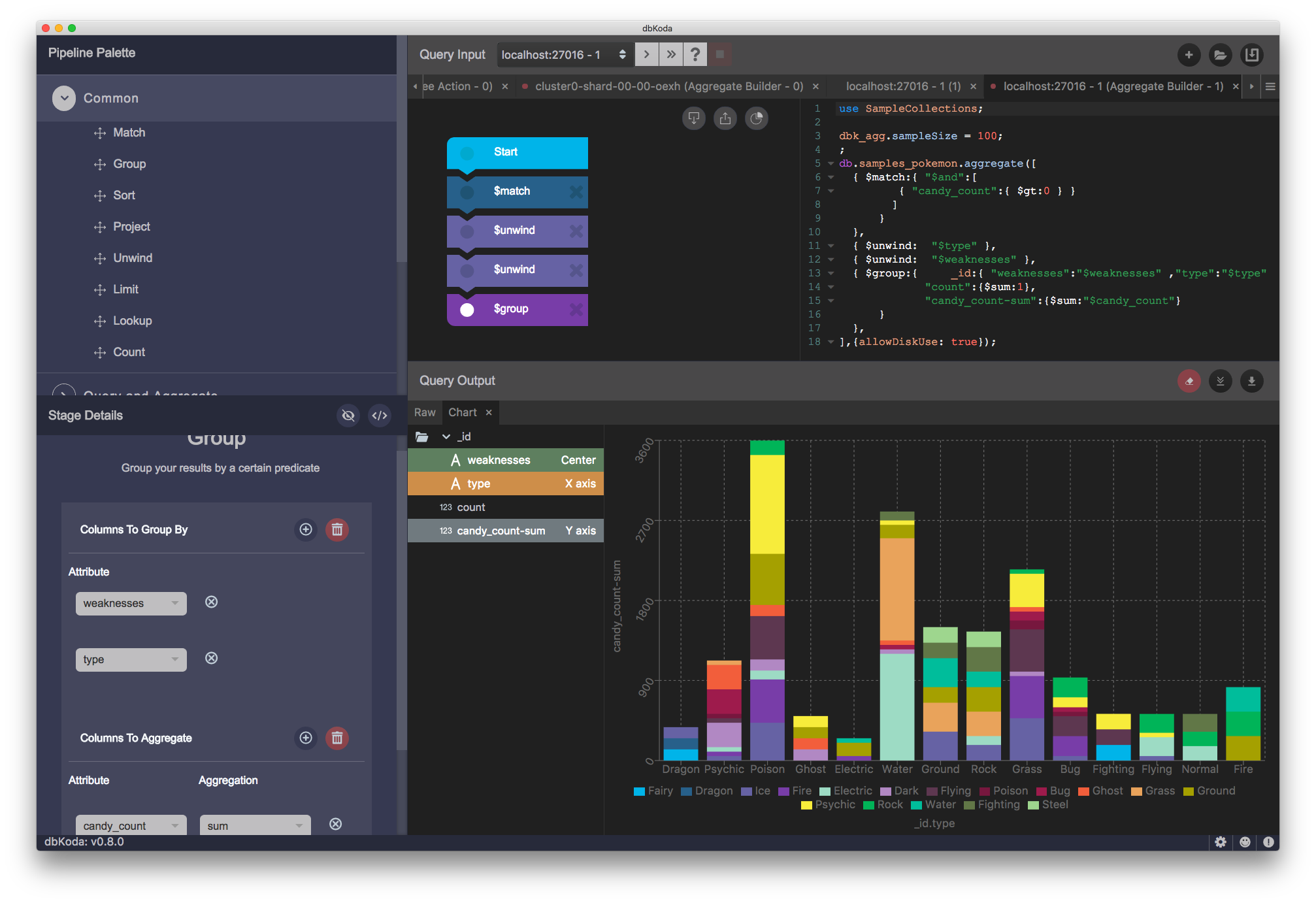

Please checkout my medium page if you've enjoyed the content here - also my little startup dbKoda has it's medium account at https://medium.com/dbkoda. You'll particularly like that if you are interested in MongoDB.

Thanks to all my squarespace readers and see you on Medium!