“Stolen” CPU on Xen-based virtual machines

Monday, July 12, 2010 at 5:07PM

I’ve written previously about how VMware ESX manages CPU and how to measure your “real” CPU consumption if you are running a database in such a VM.

VMware is currently the most popular platform for Oracle database virtualization, but Oracle’s own Oracle Virtual Machine uses the open source Xen hypervisor, as does Amazon’s Elastic Compute Cloud (EC2), which runs quite a few Oracle databases. So Oracle databases, and many other interesting workloads, will often be found virtualized inside a Xen-based VM.

I recently discovered that there is an easy way to view CPU overhead inside a Xen VM, at least if you are running a paravirtualized Linux kernel 2.6.11 or higher. In this case, both vmstat and top support an “st” column, which describes the amount of time “stolen” from the virtual machine by Xen. This stolen time appears to be exactly analogous to VMware ESX ready time: it represents time that the VM was ready to run on a physical CPU, but that CPU was being allocated to other tasks, typically to another virtual machine.

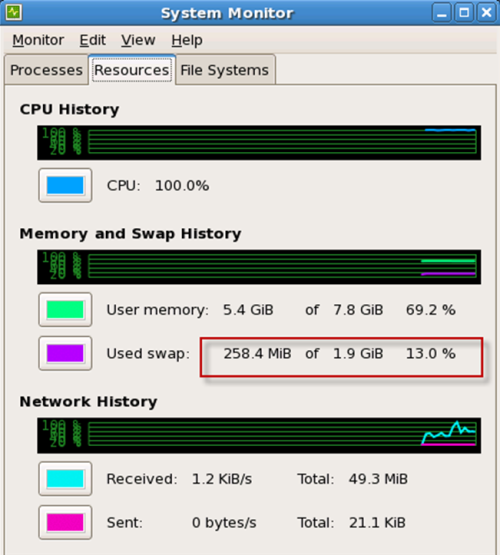

Here we see top (on Oracle Enterprise Linux on an EC2 instance) reporting that 13% of the CPU has been unavailable to the VM due to virtualization overhead. Note that the graphical system monitor doesn’t reflect this: as far as it’s concerned, CPU utilization has been at a steady 100%.

The great thing here is that you can view the overhead from within the virtual machine itself. This is because a paravirtualized operating system, which is the norm in Xen-based systems, has a kernel that has been modified to be virtualization aware. From 2.6.11 onward, the Linux kernel reports stolen time in /proc/stat, and vmstat and top read it from there to show the virtualization overhead. In ESX you have to connect with the vSphere client or use one of the VMware APIs to get this information.
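If you want the same number without running top, the counters can be read straight from /proc/stat inside the guest. The following is a minimal sketch, not the way top or vmstat are implemented: the function names are my own, and it assumes the standard field order of the aggregate "cpu" line (user, nice, system, idle, iowait, irq, softirq, steal) documented in proc(5).

```python
import time

# Field order of the aggregate "cpu" line in /proc/stat, as documented in proc(5).
# The later guest fields are already counted inside user/nice, so they are skipped.
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(line):
    """Return a dict of cumulative tick counters from the aggregate 'cpu' line."""
    parts = line.split()
    if not parts or parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    values = [int(v) for v in parts[1:1 + len(FIELDS)]]
    # Kernels older than 2.6.11 have no steal column; treat it as zero.
    values += [0] * (len(FIELDS) - len(values))
    return dict(zip(FIELDS, values))


def steal_percent(before, after):
    """Percentage of elapsed CPU time reported as stolen between two samples."""
    deltas = {name: after[name] - before[name] for name in FIELDS}
    total = sum(deltas.values())
    return 100.0 * deltas["steal"] / total if total else 0.0


def sample_steal(interval=5.0, path="/proc/stat"):
    """Take two samples `interval` seconds apart and return the steal percentage."""
    with open(path) as f:
        before = parse_cpu_line(f.readline())
    time.sleep(interval)
    with open(path) as f:
        after = parse_cpu_line(f.readline())
    return steal_percent(before, after)
```

Calling `sample_steal(5)` on a Linux guest should give a figure comparable to the "st" value top reports over the same interval. The counters are cumulative since boot, which is why the calculation works on the difference between two samples rather than on a single reading.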

As with ESX, unless you know the virtualization overhead you can’t really interpret CPU utilization correctly. For instance, if your database is CPU bound and you get a sudden spike in response time, you need to know if that spike was caused by “stolen” CPU. So you should keep track of the ESX ready statistic or the Xen “stolen” statistic whenever you run a database (or any critical workload for that matter) in a VM.
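One way to make that interpretation concrete is to express busy time as a share of the CPU the VM was actually given, rather than of wall-clock time. This is only an illustrative calculation under my own assumptions: the function name is hypothetical, and the 87% busy figure is assumed to go with the 13% steal in the top example, not read from the screenshot.

```python
def granted_cpu_utilization(busy_pct, steal_pct):
    """Busy time as a percentage of the CPU time the VM actually received.

    busy_pct  -- user + system (+ other non-idle) time, as a percentage
    steal_pct -- the "st" value reported by top or vmstat, as a percentage
    """
    granted = 100.0 - steal_pct
    if granted <= 0:
        raise ValueError("no CPU time was granted in this interval")
    return 100.0 * busy_pct / granted


# Assumed figures: 87% busy alongside 13% stolen means the VM used
# everything it was given, even though top shows less than 100% busy.
print(granted_cpu_utilization(87, 13))
```

Read this way, a VM showing 87% busy with 13% stolen is fully saturated, so a response-time spike at that point is more plausibly explained by the hypervisor withholding CPU than by a change in the database workload.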

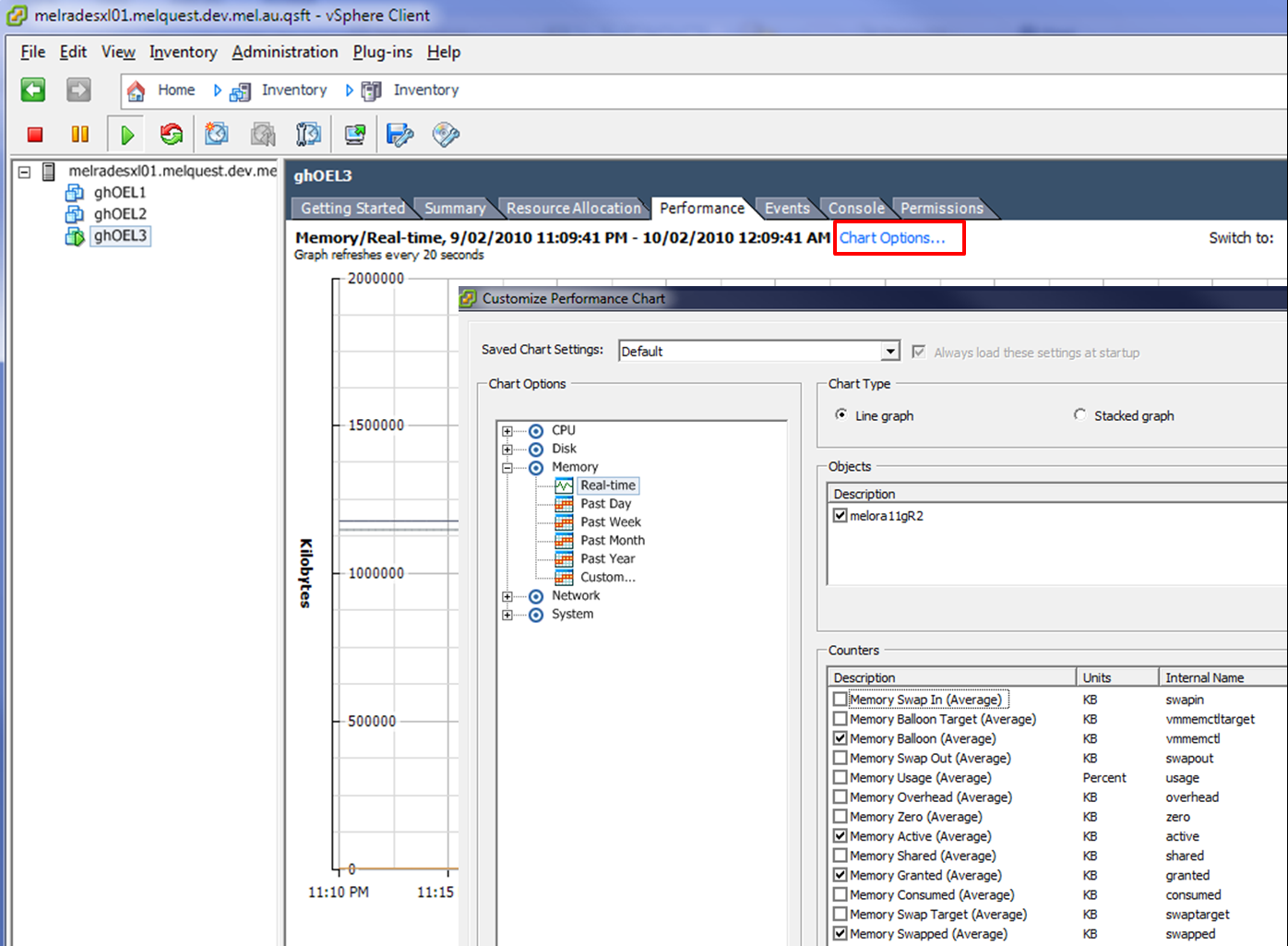

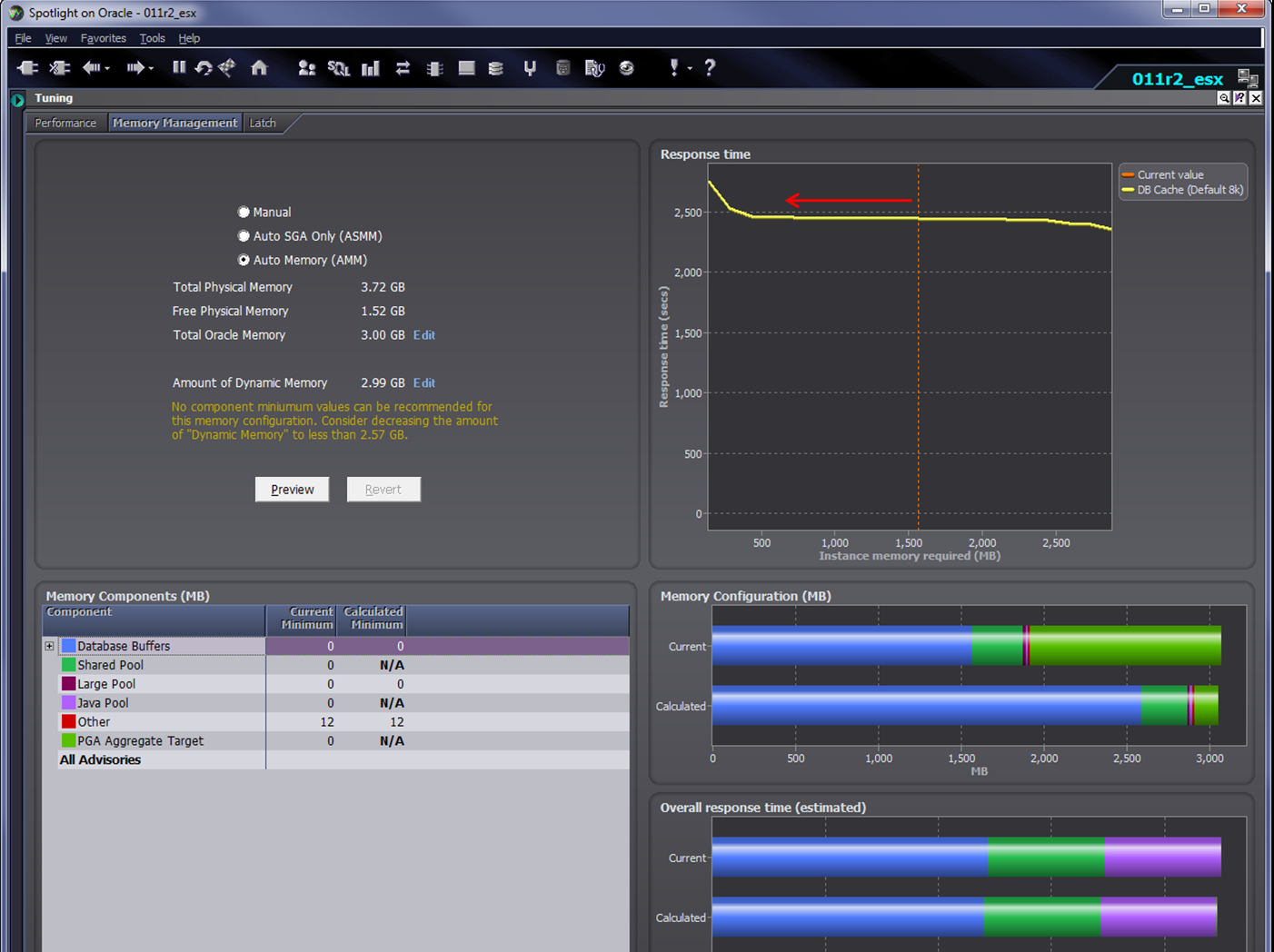

We have just introduced ESX support in Spotlight on Oracle. Starting with release 7.5 (which has just been made available with the latest release of Toad DBA suite), we show the virtualization overhead right next to CPU utilization and provide a drilldown giving you details of how the VM is serving your database.

We plan to add support for monitoring Xen-based virtualized databases in an upcoming release.
