More on the database flash cache

Tuesday, December 1, 2009 at 11:56AM

Tuesday, December 1, 2009 at 11:56AM I'm eagerly awaiting my high-performance flash SSD (an Intel X-25 E), but in the meantime I've played a bit more with the database flash cache on the cheap hardware setup described in my last post. Sometimes it can be useful to test new features on slow hardware, since you observe phenomenon that don't occur when everything is running full speed.

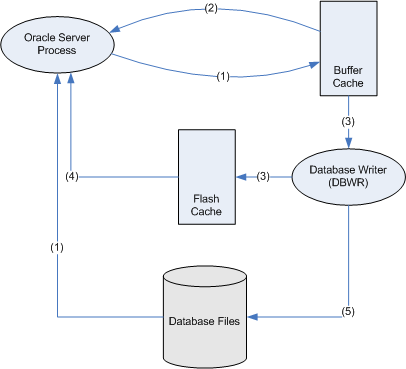

I originally naively imagined that blocks would be copied into the flash cache by the Oracle server process . Eg, that if I read from disk, I deposit the block in both the buffer cache or the flash cache. However, upon investigation it appears that blocks are moved from the buffer cache to the flash cache by the DBWR as they are about to be flushed from the buffer cache.

This is of course, a much better approach. The DBWR can write to the flash cache asynchronously, so that user sessions get the benefit - less time reading from magnetic disk - without having to wait while blocks are inserted into the flash cache.

So the lifecycle of a block looks something like this:

- The Oracle server process reads a file from disk and adds it to the buffer cache

- If a session wants that block later and it's still in the buffer cache, they can read it from the buffer cache

- Before the block leaves the buffer cache the DBWR will write it to the flash cache (if the DBWR is not too busy)

- If a session want a block later and it's still in the flash cache, then they will read it from the flash cache (and presumably place it back in the buffer cache)

- If the block is modified, the DBWR will eventually write it back to the disk. (Q: What happens to any unmodified copies of that block in the flash cache?)

DBWR and the flash cache

Reading from flash is fast - but writing is much slower. Therefore, in order to avoid performance issues, the DBWR should:

- Not write to flash unless it has to and

- Not write to flash at all if it will get in the way of other more important activities.

To the first point, DBWR does not appear to populate the flash cache until blocks are about to be flushed. In other words, the DBWR doesn't seem to write a buffer to the flash cache just in case it is going to be flushed, but only if it is actually (or maybe probably) going to be flushed. We do not observe writes to the flash cache when blocks are brought into the buffer cache unless other blocks are also being flushed out.

Secondly, the DBWR won't write to flash cache - and won't delay blocks from being flushed - if it is busy writing dirty blocks to disk. The DBWR must clear dirty blocks from the cache as quickly as possible, otherwise "free buffer waits" will prevent new blocks from being introduced to the buffer cache. If blocks are about to be flushed from the buffer cache but the DBWR is busy then the blocks will not get written to the flash cache, and the statistic 'flash cache insert skip: DBWR overloaded' will be incremented.

Measuring db flash cache effectiveness

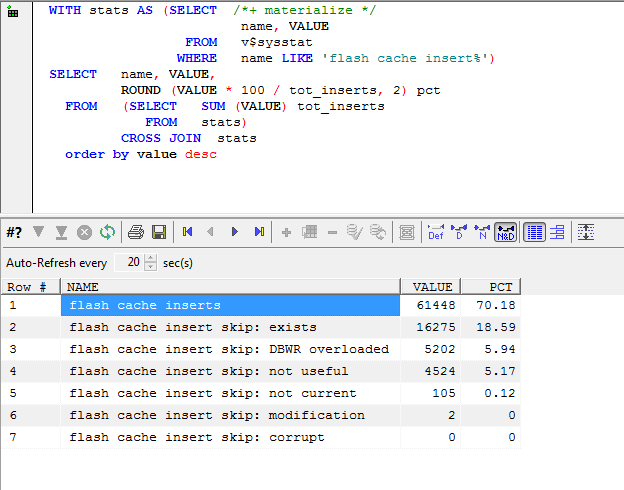

The following query reports on how often a busy DBWR is forced to skip flash cache inserts because it is too busy or for other reasons:

The "DBWR overloaded" statistic I think we understand - the DBWR is busy writing dirty blocks to disk and hasn't the bandwidth to write clean blocks to the flash cache.

"flash cache insert skip: exists" is also easy to understand. If we read a block back from the flash cache into the buffer cache, then it remains in the flash cache. There's no need to write it to the flash cache again should it again age out of the buffer cache.

"not current" probably means that the DBWR is aware of a more recent copy of the block on disk or in the buffer cache and for that reason is going to decline to write a version to flash.

"not useful" I do not understand....

The biggest takeaway here is - I think:

On a system with a very busy DBWR, the db flash cache may be less effective.

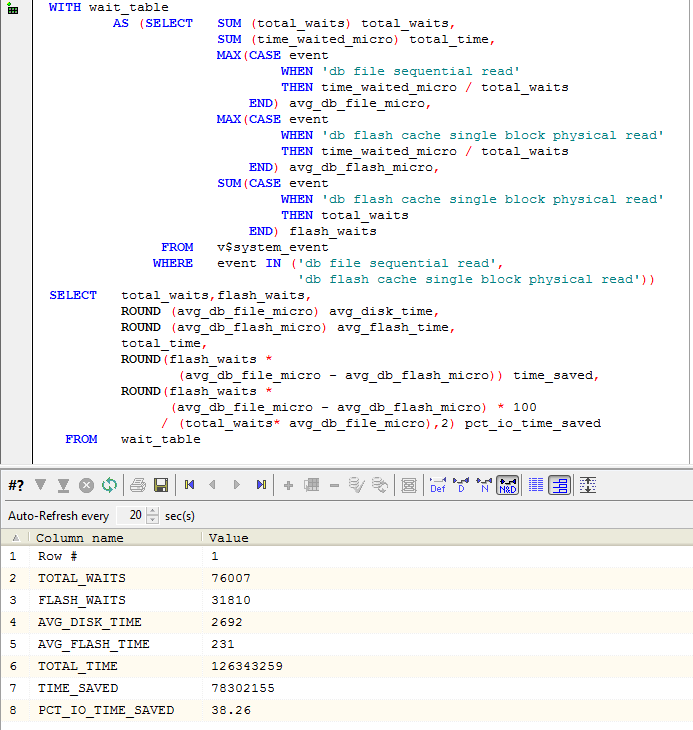

To measure the benefit from the flash cache we can compute the number of flash cache reads that avoided a physical read and multiply by the average time for each type of read. This query attepts to do that though only for single block reads:

Conclusion

The architecture of the DB flash cache allows us to relax a bit regarding the relatively poor write performance that is associated with flash SSD. If we place a datafile on flash SSD, we risk creating a free buffer wait bottleneck: the DBWR will stall trying to write blocks to the slow (in terms of write latency) flash drive and so we might actually get worst performance compared to magnetic disk - at least if we have a high write rate. But with the flash cache, the DBWR only writes when it has free time. Consequentially, there should be little real down side - the worst that can happen is that the DBWR is too busy to populate the flash cache and so it becomes less useful.

The efficiency of the db flash cache is going to depend on two main factors:

- Does the DBWR have enough free time to write to the flash cache?

- How long does it take to write to the flash device?

The answer to the first question depends on the current rate of DBWR activity which in turn probably depends on how much IO write bandwidth exists on your disk array. But if you have the sort of database that suffers from or is on the verge of suffering from free buffer waits, then probably the db flash cache won't work too well because the DBWR will never be able to write to it.

The answer to the second question depends on the quality of the flash SSD. You generally want to use Flash disks which have the lowest possible write latency. That usually means support for the TRIM API call, and flash that uses Single Level Cells (SLC).

Reader Comments (7)

In Step #3 in the oracle’s implementation, is the block chosen for writing into the flash cache a clean block or can it be a modified block.

What is the behavior if the SSD device is removed when the server is operational and online?

When the server is shut down and then restarted, do they reuse the data on SSD? What if SSD is gone?

Is Oracle positioning this feature towards enterprise-class SSDs only or do they assume a user use this feature with a range of SSD classes and vendors?

To answer David's question:

By supporting SSD as a secondary cache, and an optional cache at that, Oracle is trying to ensure that the performance doesn't backfire if the SSD is a poor performer. Pretty much all flash SSD is poor at writes but some - cheap MLC drives without TRIM support - are particularly bad. However, the DBWR will only write to the flash cache if it can do so without slowing down normal writes to the datafiles. If the SSD is cheap, it will do no harm, but some data wan't make it to cache so the gains will not be as good.

I'm going to do a post soon that will elaborate on this. But the short answer is that I think your db flasch cache "hit rate" will be lower if you have a slow SSD because the DBWR won't be able to write as many blocks in. So the better the drive the more the gain, but it should give some improvement even on very cheap SSD.

TO answer "Curious":

I believe that the block in the flash cache could be modified, but that can only happen if the DBWR has written it to the datafile. So (I believe) the block could be uncommitted, but not "dirty" in the sense that the modification only exists in the cache. The block cannot leave the primary cache until it is written to the datafile after all.

When the database reboots the contents of the database flash cache will be lost, AFAIK. I've not tested this directly, but I'd be amazed if it were any other way. I do know that the database will restart fine if the SSD is removed, providing that you change your DB_FLASH_CACHE_FILE parameter to reflect this. The database won't start if that parameter refers to a non-existent file.

I've also not tried removing the SSD when the database is running; my SSD is now a SATA disk, not a thumb drive. I will try this with a USB flash drive when I get a chance. Should be interesting.....

If I setup the cache on a removable device and actually remove it, then Oracle will disable the cache and keep running.

You'll see messages like this in the alert log:

17/01/2010 10:02:07 PM Sun Jan 17 22:02:07 2010

Errors in file /oracle/diag/rdbms/g11r2gac/G11R2GAC/trace/G11R2GAC_ora_7987.trc:

ORA-27072: File I/O error

Linux Error: 5: Input/output error

Additional information: 4

Additional information: 115937

Additional information: -1

Encountered Error 17611 repeatedly while accessing Flash Cache. Disabling..

17/01/2010 10:03:08 PM Sun Jan 17 22:03:08 2010

L2 cache file closed by dbwr 0

L2 cache disabled for dbwr 0

I have translated this blog entry into Chinese.

http://www.dbthink.com/?p=135

Is any particular reason why you did not include scattered read waits to the last query that measures Flash Cache efficiency?

IMO both of them should be included, as cache buffer contains both buffers obtained during indexed access and FTS.

Thanks for great post!

Hey sorry for the delay in replying. So much spam comments I find it hard to find the good stuff :-)

So yes, the flash cache will cache any buffers that come in through the buffer cache but in 11g, FTS is performed by direct path reads Therefore never gets into the cache in the first place. However that would show up as direct path read, so I could have included scattered reads - would have only included very small tablescans that were buffered though..