It's been possible for some time to run Oracle within the Amazon cloud. I wrote an introduction to Oracle on EC2 back in 2008, and we use EC2 pretty extensively here at Quest for testing and deployment. Up until now, Oracle has been made available within EC2 instances, which appear for all purposes to be remote hosts. Recently, Amazon made Oracle available in a more directly cloud-based fashion as one of the options in their Relational Database Service (RDS). Using RDS, Amazon takes complete responsibility for database management, providing a more complete Database As A Service (DBaaS) offering, similar to what Microsoft offers with SQL Azure. Amazon handles installation, upgrades, backups and so forth. In theory, you can use the database without needing to do any administrative work.

Indeed, Amazon takes so much responsibility that long-time DBAs like me are unlikely to be really happy; the very notion of being unable to connect to the operating system, for instance, is disturbing, and RDS is currently missing support for many of our favourite tools.

However, I wanted to give it a test drive, so here are some screenshots and observations to give you a feel for it.

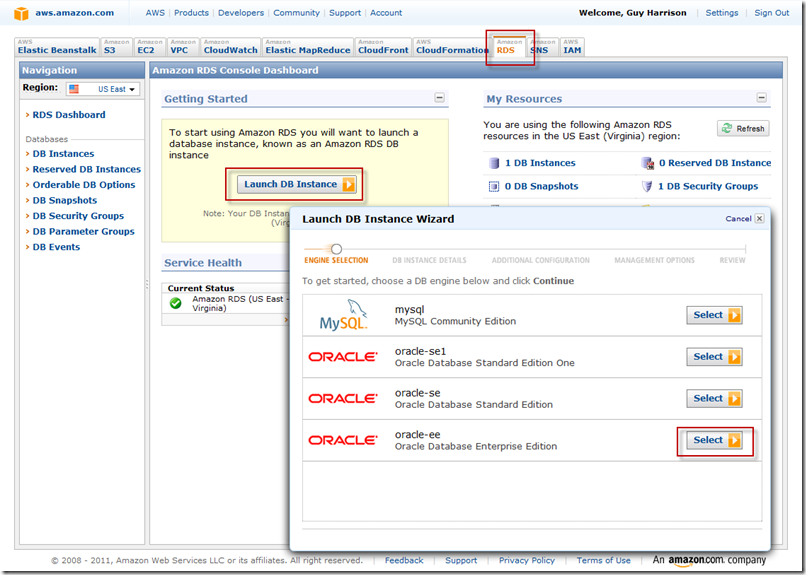

Creating the Oracle RDS database

To use any of the Amazon cloud facilities, you need an Amazon AWS account. Once you have that, you create a new RDS database from the AWS console, where you can launch a new MySQL or Oracle database:

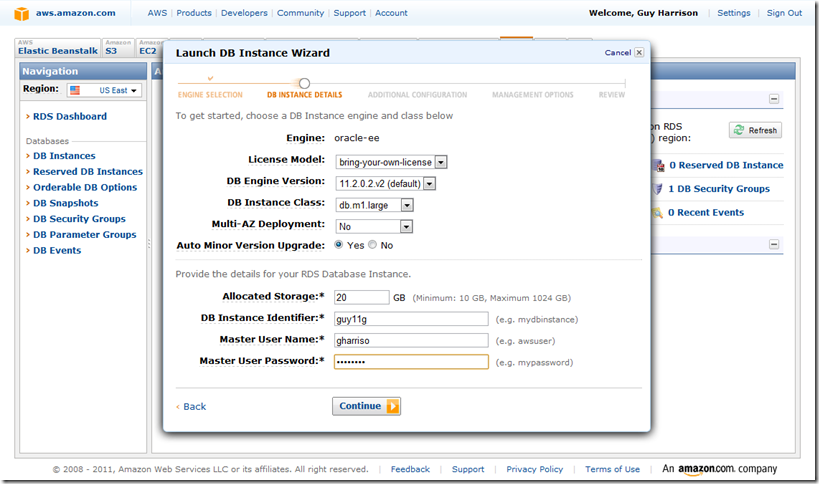

You then specify the parameters for the new instance. At the time of writing, some of these screens clearly had not been updated from the older MySQL options. Here we can specify:

- Type of license. If you have a site license you can use that (as I have below). If not, you can pay Amazon by the hour, and Amazon presumably kicks some of that back to Oracle.

- Oracle version. Note also that you can ask to have the version updated for you with patches automatically – quite cool.

- Instance Class. This is the size of the virtual machine and determines memory and CPU availability. These match the equivalent EC2 instance types (see http://aws.amazon.com/ec2/#instance) and vary from 1 core with 1.7GB to 8 (faster) cores with 64GB. Charging varies accordingly, of course.

- DB instance identifier. At first you might think that is the database service name, but NO, it’s an Amazon identifier. You specify the service name on the next page.

- Username and Password of the DBA account you’ll use.

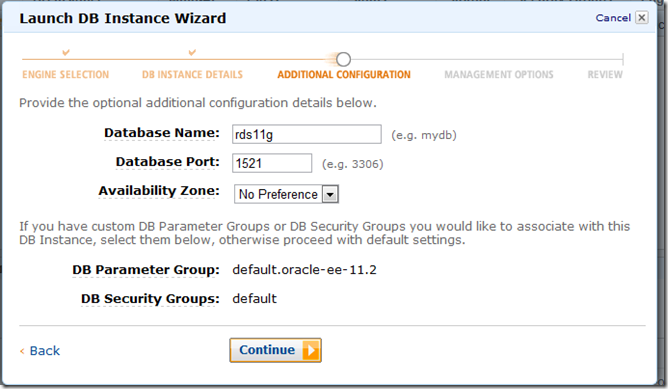

On the next page we specify the database name (i.e. the Service/SID) and the port. Note that at the moment the default port is 3306, which is the MySQL listener port; you should change it if you want a more familiar Oracle listener port like 1521.

Click OK a couple more times and your database will start up. It takes 5 minutes or so to get running.
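
For scripted setups, the same console choices could be collected into an API request. This is a minimal sketch, assuming the boto3 Python SDK and its `create_db_instance` call (the walkthrough above used the console, not this). The helper name `build_oracle_rds_request`, the storage size and all the values are hypothetical placeholders.

```python
def build_oracle_rds_request(identifier, db_name, username, password,
                             instance_class="db.m1.small",
                             engine="oracle-ee",
                             byol=True, port=1521, storage_gb=10):
    """Collect the console choices into keyword arguments for an RDS create call.

    Hypothetical helper: it only builds a dict and never contacts AWS.
    """
    return {
        "DBInstanceIdentifier": identifier,  # Amazon's identifier, not the service name
        "DBName": db_name,                   # the Oracle Service/SID name
        "Engine": engine,                    # assumed edition; check which are offered
        "LicenseModel": "bring-your-own-license" if byol else "license-included",
        "DBInstanceClass": instance_class,   # size of the virtual machine
        "MasterUsername": username,          # the DBA account
        "MasterUserPassword": password,
        "Port": port,                        # 1521 instead of the 3306 default
        "AllocatedStorage": storage_gb,      # in GB; placeholder value
        "AutoMinorVersionUpgrade": True,     # let Amazon apply patches automatically
    }


request = build_oracle_rds_request("demo-instance", "ORCL", "dba_user", "change-me")
for key, value in request.items():
    print(f"{key}: {value}")

# With boto3 installed and credentials configured, the request could be sent as:
#   import boto3
#   boto3.client("rds").create_db_instance(**request)
```

Keeping the request as a plain dictionary makes it easy to review before anything is launched (and billed).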

Connecting to the database

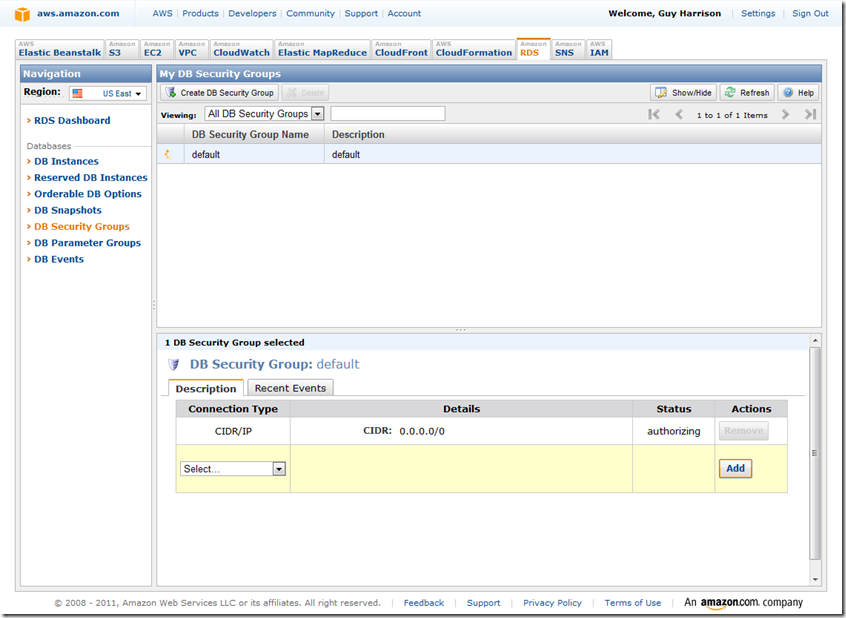

Before you can connect, however, you’ll need to create a security group, which essentially defines the Amazon firewall for your instance. Below I’m configuring it to let anything through (not ideal, but I travel a lot so I can’t predict my IP address range). The CIDR/IP is a mask that defines which IP addresses will be allowed to connect to the database instance.

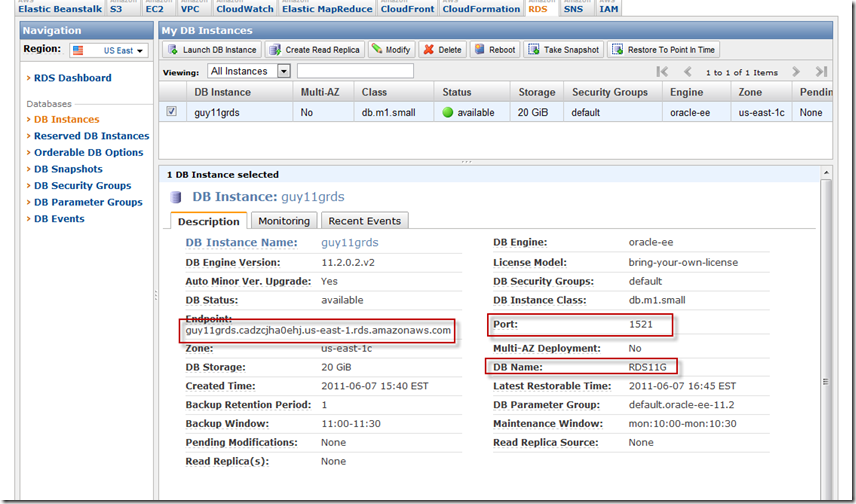
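
To get a feel for what a CIDR mask admits, here is a small sketch using Python's standard `ipaddress` module. It only illustrates the matching rule; the addresses are documentation examples and the function name `is_allowed` is made up for this illustration.

```python
import ipaddress


def is_allowed(client_ip: str, cidr: str) -> bool:
    """Return True if client_ip falls inside the CIDR range."""
    return ipaddress.ip_address(client_ip) in ipaddress.ip_network(cidr)


# 0.0.0.0/0 matches every IPv4 address (the "let anything through" setting).
print(is_allowed("203.0.113.25", "0.0.0.0/0"))       # True

# A /24 fixes the first three octets, so only 203.0.113.x gets in.
print(is_allowed("203.0.113.25", "203.0.113.0/24"))  # True
print(is_allowed("198.51.100.7", "203.0.113.0/24"))  # False
```

If your address range is predictable, a narrower mask like the `/24` above is the safer choice.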

Now we can connect. We need to know the hostname, which is shown as the “Endpoint” in the DB Instance description page. The port and service name are also here:

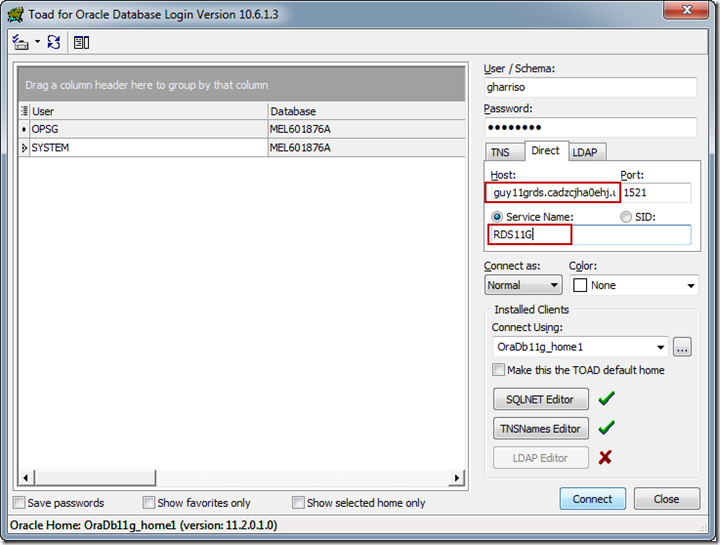

We could create a TNSNAMES entry using those details or connect via SQL*Plus in the form username/password@host:port/Service. Below, I specify these details directly into TOAD:
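
For the command-line route, the connect string and a TNSNAMES entry can be assembled from the endpoint details. This is a sketch only: the host, alias and credentials are placeholders, the helper names are hypothetical, and it assumes the database name is accepted as a `SERVICE_NAME` (some setups may need `SID =` instead).

```python
def sqlplus_connect_string(user, password, host, port, service):
    """Build the username/password@host:port/Service form described above."""
    return f"{user}/{password}@{host}:{port}/{service}"


def tnsnames_entry(alias, host, port, service):
    """Build a basic tnsnames.ora entry for the same endpoint."""
    return (
        f"{alias} =\n"
        f"  (DESCRIPTION =\n"
        f"    (ADDRESS = (PROTOCOL = TCP)(HOST = {host})(PORT = {port}))\n"
        f"    (CONNECT_DATA = (SERVICE_NAME = {service}))\n"
        f"  )\n"
    )


# Placeholder endpoint; use the "Endpoint" value from the DB Instance description.
host = "mydb.example.rds.amazonaws.com"
print(sqlplus_connect_string("dba_user", "change-me", host, 1521, "ORCL"))
print(tnsnames_entry("RDSORCL", host, 1521, "ORCL"))
```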

Restrictions

As easy as it is to get started with RDS, there are a lot of restrictions. Most significantly:

- You can’t connect to the OS.

- Datafiles must be Oracle Managed Files. No filesystem specifications are allowed. ASM is not supported.

- Some commands are not supported, including ALTER SYSTEM. However, there are PL/SQL packages that provide a lot of the common facilities such as switching logs, checkpointing, tracing and killing sessions.

- You can only access the alert log and trace files via an external table interface.

- No OEM!!! And for that matter, monitoring products such as Quest’s own Spotlight and Foglight cannot be installed (due to the above restrictions). Even some parts of TOAD won’t function, since they rely on forbidden commands.

The idea with RDS and these missing features is that “you ain’t going to need it”: the database is self-managing and these DBA utilities simply aren’t required. For more details check out this part of the RDS manual.

Changing the database CPU and memory

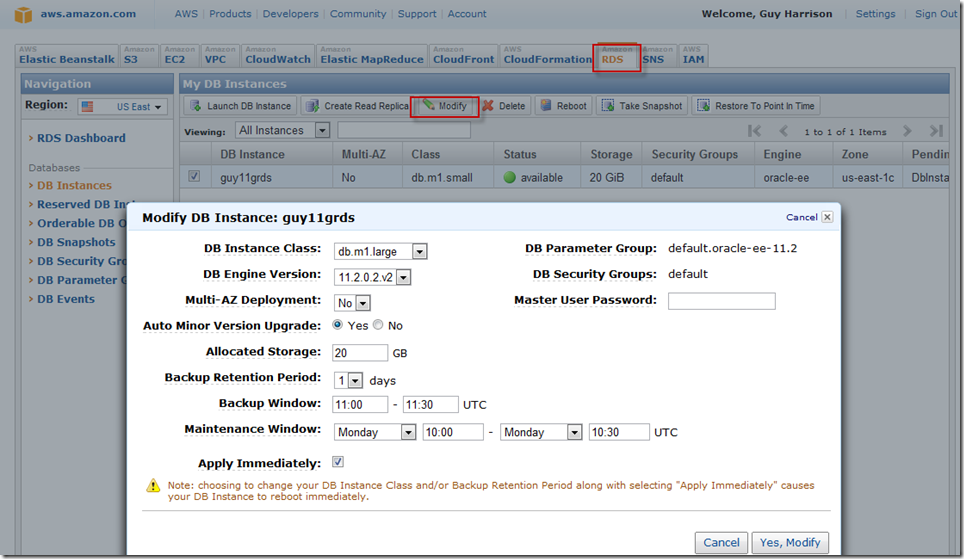

One of the great things about RDS is that you can resize the instance. If you want more memory or CPU, you can use the modify tab to change the specification:

This does require a reboot, which happens either during the maintenance window or, if you so specify, immediately. Still, it is much easier than achieving the same result on EC2, which is almost impossible.

Monitoring

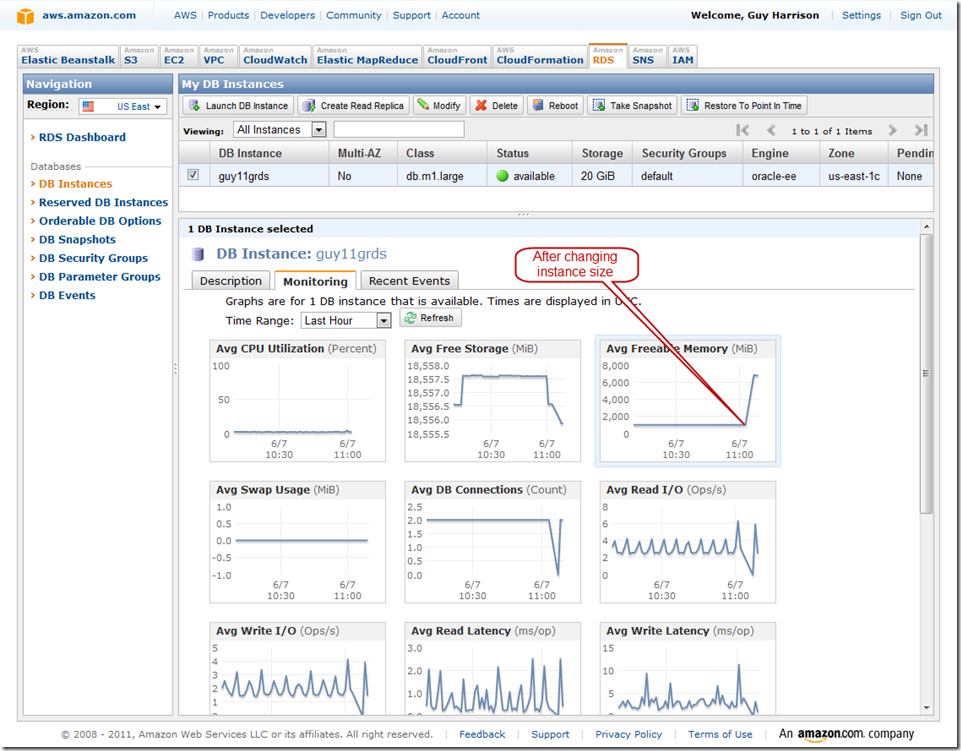

Although there’s no OEM and no way to run Spotlight or other 3rd party monitoring tools, there is a very basic monitoring screen.

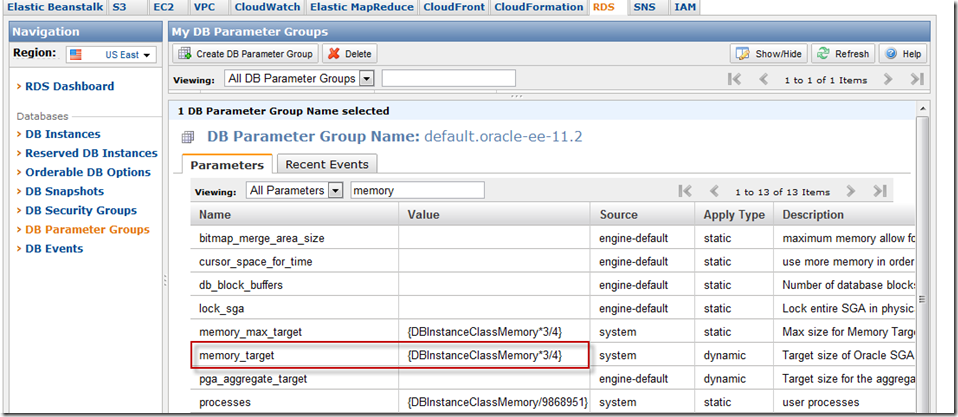

Parameters

Parameters are managed through parameter groups, which assign values dynamically. For instance, in the default parameter group, the memory target is set to 75% of total system memory. If you reboot the system into a higher class, the memory target changes with it. You can change the values for a parameter group, and you can create your own.
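
As a quick worked example of that 75% rule, here is the arithmetic for the smallest and largest instance sizes mentioned earlier. The function name is made up for illustration, and the sizes are the approximate figures quoted above rather than exact values from Amazon.

```python
def default_memory_target_gb(instance_memory_gb: float) -> float:
    """Memory target under the default parameter group: 75% of system memory."""
    return instance_memory_gb * 0.75


# Approximate instance memory sizes quoted earlier in this post.
for memory_gb in (1.7, 64):
    target = default_memory_target_gb(memory_gb)
    print(f"{memory_gb} GB instance: memory target of about {target:.3f} GB")
```

Because the value is derived from the instance class, resizing the instance changes the memory target without any parameter edit.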

Conclusion

End to end, it probably takes about 10 minutes to get set up once you have your account details. Not bad. And Amazon takes care of backups and the like.

However, it’s probably not something I’ll ever do again, because I can’t really cope with the idea of not being able to go directly to the alert log, trace files, etc. And I definitely don’t like not being able to use tools like Spotlight or even OEM. Amazon provides workarounds for lots of this stuff (see this page for instance), but I’m too used to the command line. Still, it’s worth knowing how this all works and, who knows, it might be handy one day when I want to quickly set up a 64GB SGA database.


Tuesday, September 27, 2011 at 4:09PM
