Comparing Hadoop Oracle loaders

Thursday, October 6, 2011 at 12:55AM

Oracle put a lot of effort into promoting the upcoming Oracle Hadoop Loader (OHL) at OOW 2011 – it was even highlighted in Andy Mendelsohn's keynote. It’s great to see Oracle recognizing Hadoop as a top-tier technology!

However, there were a few comments made about the “other loaders” that I wanted to clarify. At Quest, I lead the team that writes the Quest Data Connector for Oracle and Hadoop (let’s call it the “Quest Connector”), a plug-in to the Apache Hadoop SQOOP framework that provides optimized bidirectional data loads between Oracle and Hadoop. Below I’ve outlined some of the high-level features of the Quest Connector in the context of the Oracle-Hadoop loaders. Of course, I got my information on the Oracle loader from technical sessions at OOW, so I may have misunderstood and/or the facts may change between now and the eventual release of that loader. But I wanted to go on the record with the following:

- All parties (Quest, Cloudera, Oracle) agree that native SQOOP (i.e., without the Quest plug-in) will be sub-optimal: it will not exploit Oracle direct path reads or writes, will not use partitioning, nologging, etc. Both Cloudera and Quest recommend that if you are doing transfers between Oracle and Hadoop, you use SQOOP with the Quest connector.

- The Quest connector is a free, open source plug-in to SQOOP, which is itself a free, open source software product. Both are licensed under the Apache 2.0 open source license. Licensing for the Oracle Loader has not been announced, but Oracle has said it will be a commercial product and therefore presumably not free under all circumstances. It’s definitely not open source.

- The Quest loader is available now (version 1.4); the Oracle loader is in beta and will be released commercially in 2012.

- The Oracle loader moves data from Hadoop to Oracle only. The Quest loader can also move data from Oracle to Hadoop. With the Quest connector, imports from an Oracle database into Hadoop are usually 5+ times faster than with SQOOP alone.

- Both Quest and the Oracle loader use direct path writes when loading from Hadoop to Oracle. Oracle do say they use OCI calls which may be faster than the direct path SQL calls used by Quest in some circumstances. But I’d suggest that the main optimization in each case is direct path.

- Both Quest and the Oracle loader can do parallel direct path writes to a partitioned Oracle table. In the case of the Quest loader, we create partitions based on the job and mapper ids. Oracle can use logical keys and write into existing partitioned tables. My understanding is that they will shuffle and sort the data in the mappers to direct the output to the appropriate partition in bulk. They also do statistical sampling which may improve the load balancing when you are inserting into an existing table.

- The Quest loader can update existing tables, and can do Merge operations that insert or update rows depending on the existence of a matching key value. My understanding is that the Oracle loader will do inserts only, at least initially.

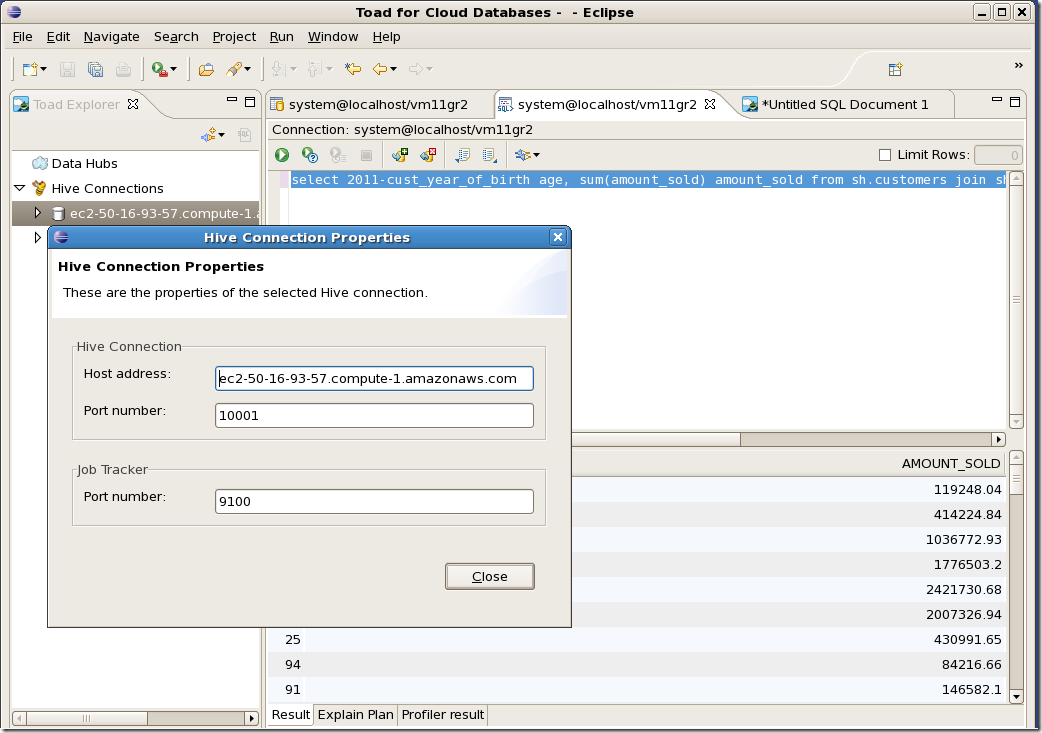

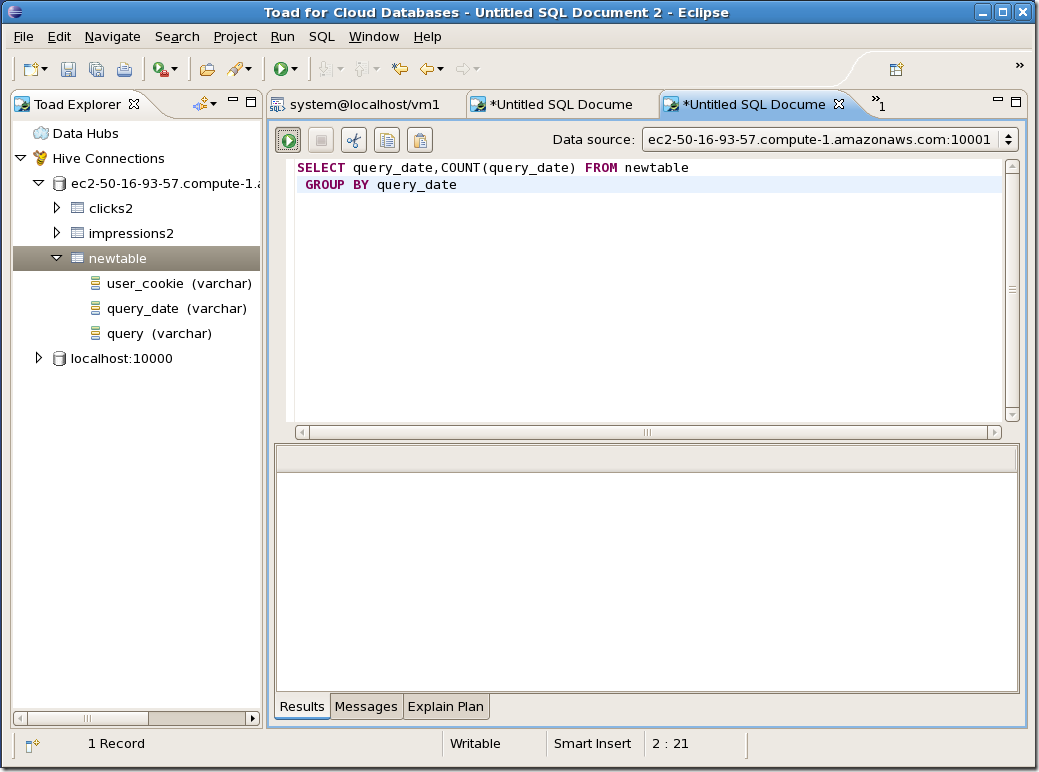

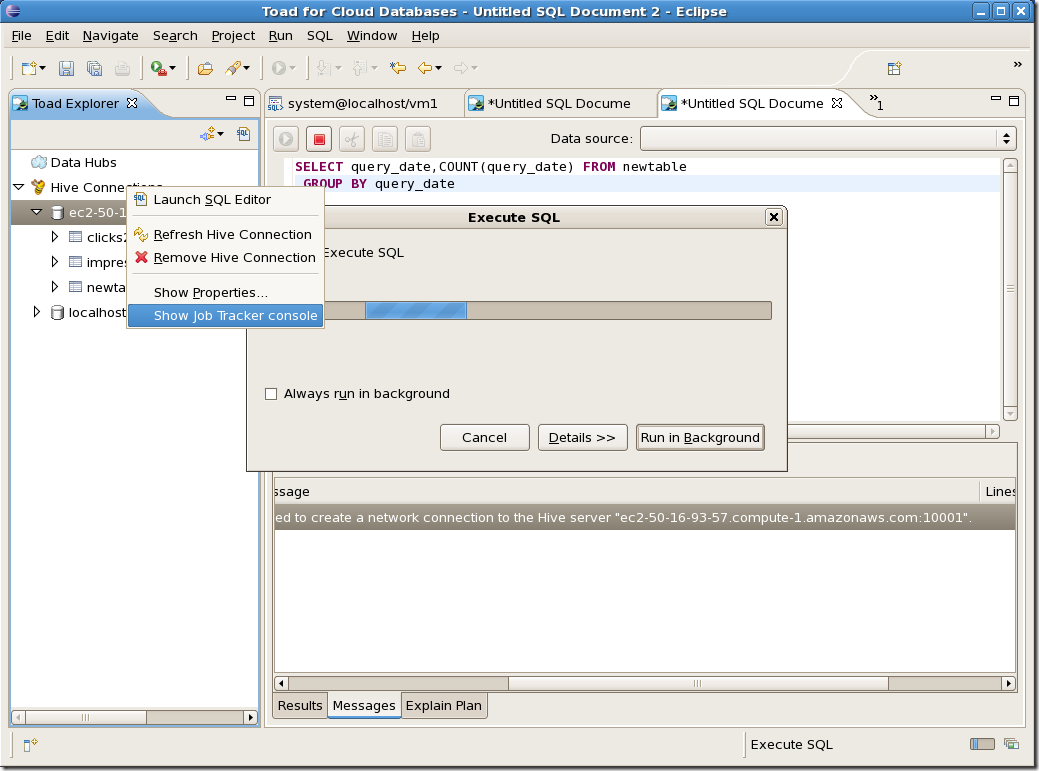

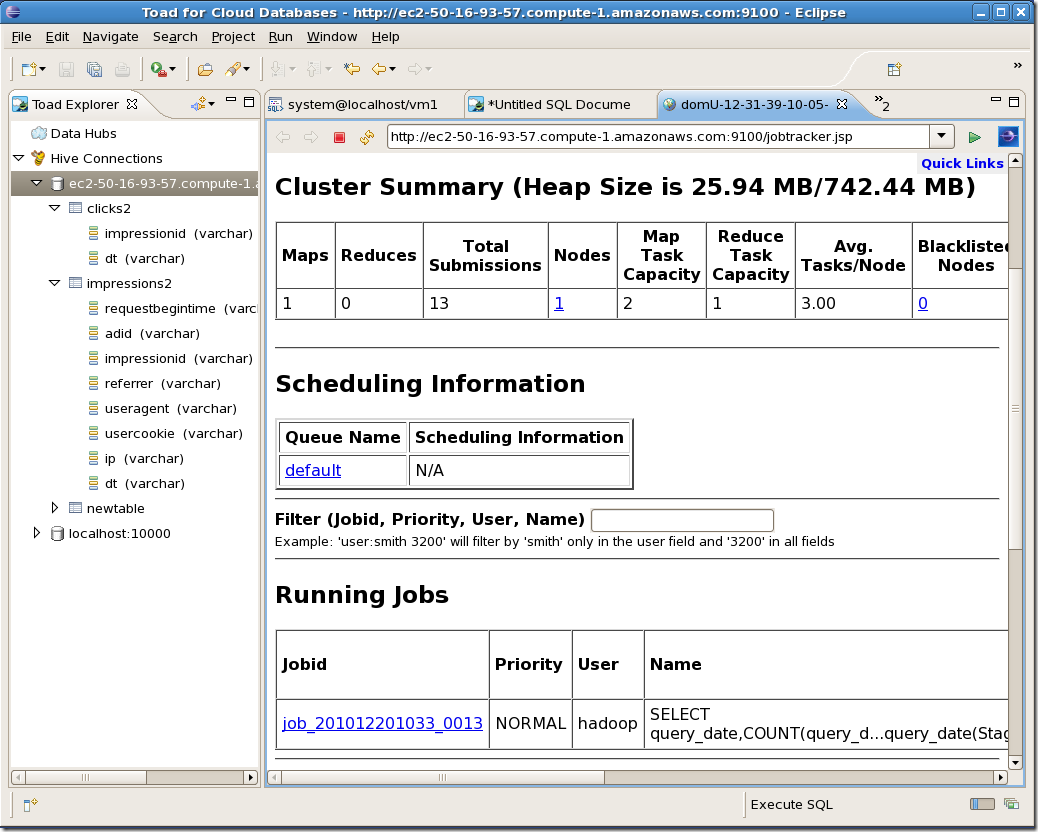

- Both the Quest connector and the Oracle loader have some form of GUI. The Oracle GUI, I believe, is in the commercial ODI product. The Quest GUI is in the free Toad for Cloud Databases Eclipse plug-in. I’ve put a screenshot of that at the end of the post.

- The Quest connector uses the SQOOP framework, which is an Apache Hadoop sub-project maintained by multiple companies, most notably Cloudera. This means that the Hadoop side of the product was written by people with a lot of experience in Hadoop. Cloudera and Quest jointly support SQOOP when used with the Quest connector, so you get the benefit of having very experienced Hadoop people involved as well as Quest people who know Oracle very well. Obviously Oracle knows Oracle better than anyone, but people like me have been working with Oracle for decades and have credibility, I think, when it comes to Oracle performance optimization.
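For readers less familiar with the Merge semantics mentioned in the list above (insert a row when no matching key exists, update it when one does), here is a minimal Python sketch of that behavior. It is purely illustrative: the `merge_rows` function, the in-memory dictionary standing in for a table, and the sample rows are all hypothetical, and this is not how the Quest connector or Oracle implements the operation.

```python
from typing import Dict, Iterable, Tuple

Row = Dict[str, object]


def merge_rows(target: Dict[object, Row], incoming: Iterable[Row], key: str) -> Tuple[int, int]:
    """Apply insert-or-update semantics to `target`, keyed on `key`.

    `target` is a hypothetical in-memory stand-in for a database table.
    Returns a tuple of (rows inserted, rows updated).
    """
    inserted = 0
    updated = 0
    for row in incoming:
        k = row[key]
        if k in target:
            # A matching key exists: update the existing row in place.
            target[k].update(row)
            updated += 1
        else:
            # No matching key: insert a copy of the incoming row.
            target[k] = dict(row)
            inserted += 1
    return inserted, updated


if __name__ == "__main__":
    # Hypothetical sample data, for illustration only.
    table = {1: {"id": 1, "name": "alice"}, 2: {"id": 2, "name": "bob"}}
    batch = [{"id": 2, "name": "robert"}, {"id": 3, "name": "carol"}]
    print(merge_rows(table, batch, "id"))
    print(table)
```

An insert-only loader would, by contrast, have to either reject the row with `id` 2 or load it as a duplicate, which is why merge support matters when the target table already holds data.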

Again, I’m happy to see Oracle embracing Hadoop; I just wanted to set the record straight with regard to our technology, which exists today as a free tool for optimized bidirectional data transfer between Oracle and Hadoop.

You can download the Quest Connector at http://bit.ly/questHadoopConnector. The documentation is at http://bit.ly/QuestHadoopDoc.